AWS Redshift Data Sharing – Setup and Key Use Cases

Davor Turkalj

/ 2022-05-11Introduction

Every organization has multiple teams – departments which often have to share data in order to promote unified and accurate decisions about their product or services. Within a classic Data Warehouse, Data Sharing can be time consuming, stressful and often dependent on DBAs additional tooling. Additionally, when it comes to accurate testing, users often have to move data themselves or announce well ahead when they will need that data so that DBAs can prepare a testing environment for them.

Finally, AWS has come up with a solution. Amazon Data Sharing is now generally available and with this feature users can display data created in one cluster to multiple other clusters without any data movement or replication systems you need to buy and set up. Although this is a feature that AWS provides and markets as very simple to use, there are a few things you need to know to set it up and configure correctly, and this article aims to explain how to do so properly

How to Set up Data Sharing

Before configuring your shared cluster, here are a few things to consider:

- You can only utilize this feature if your Redshift is running on an RA3 cluster type. This is because RA3 clusters use managed storage and therefore can share their data with other clusters. You can read more about RA3 here.

- Shared data is read only. This means that you can’t modify any of the data that is made available to you via data sharing.

- You should use this only within the same AWS account, otherwise you will have to make your PROD cluster publicly accessible in order for it to work cross-accounts. This will have security implications for your environment.

Now we can start with the actual setup and configuration of your shared cluster.

First thing you need to do is estimate the size of the new cluster. You can make this estimation based on the system that will use this shared cluster as well as the number of users you will have. This information should already be available to you in your current production cluster. Analyze your current workload and users that will move to the new cluster while also analyzing any of the current issues that you would like to eliminate by this move.

Consider this when creating your new cluster:

If your new cluster needs to be smaller than your current cluster, create the new cluster the same size as your production and then use elastic resize to go down to the number of nodes you need. This will give you a bigger number of data slices for a much better performance when it comes to reading the data.

With your new consumer cluster up and running, you can start with data sharing setup.

Shared Cluster Setup

This process starts on your Production cluster where you need to first create the data share.

1. CREATE DATASHARE ReportingDatashare;

Second step is to add all the schemas tables and views you want to share with the other cluster, the consumer cluster.

Note: You always start by first adding schemas you will share and then tables and views.

2. ALTER DATASHARE ReportingDatashare ADD SCHEMA reporting_datamart;

3. ALTER DATASHARE ReportingDatashare ADD TABLE reporting_datamart.sales;

If you want to share all objects within one schema you can run:

ALTER DATASHARE SalesDatashare ADD ALL TABLES IN SCHEMA reporting_datamart;

In case you wish to remove some of the tables from your data share, you can do this by running the statement below:

ALTER DATASHARE ReportingDatashare REMOVE TABLE schema_name.table_name;

Once you have added all the objects you wish to share to data share, you should then configure permissions. In order for your consumer cluster to see and use data share created in previous steps, you will need to run the GRANT permission and you will also need the cluster namespace GUID of your consumer cluster. There are 2 ways of obtaining this information, first by running SQL statement

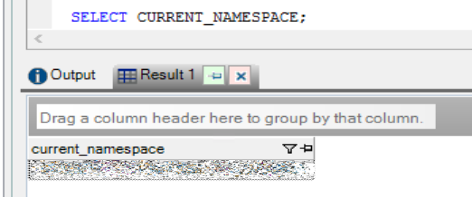

SELECT CURRENT_NAMSEPACE;

on your consumer cluster

Option number two is to go to your Redshift Web console where you can find it under General information.

Now that you have the name space of your consumer cluster, you can run the GRANT command on your Production cluster in order to allow consumer cluster to access the data share:

GRANT USAGE ON DATASHARE ReportingDatashare TO NAMESPACE '111111-xxxx-4bf3-3333-ababababab';

If you wish to review all objects added to your data share, you can run the below SQL statement that will give you a detailed list of all objects in your data share.

DESC DATASHARE ReportingDatashare;

With this, we have finished our steps on Production cluster and now we move to our consumer cluster. In the consumer cluster, we will first create a new local database from the data share we created in previous steps. Similar to the step where we granted usage to our consumer cluster, here we will need namespace of our Production cluster. Use one of the methods described earlier to obtain the cluster namespace while remembering you need the Production namespace.

CREATE DATABASE Sales from DATASHARE ReportingDatashare OF NAMESPACE '33333-1287-4vf3-8cw2-xaxaxaxax';

Since this is a new object in your Consumer cluster, don’t forget to grant usage access to this database to your users and groups.

Querying Shared Data

Now you can start querying your shared data in your Consumer cluster. This can be done in 3 ways.

1 After connecting to your local database in that cluster, you can query shared objects using a three-part notation similar to querying any other local database object, using the notation

<database>.<schema>.<view/table>:

select count(*) from sales.reporting_datamart.sales;

2 Create schemas in your local database on the consumer cluster and then build views based on tables from your shared database.

CREATE VIEW reporting_datamart.sales AS SELECT * FROM sales.reporting_datamart.sales with no schema binding;

3 Create external schemas under your local database based on your shared database.

CREATE EXTERNAL SCHEMA 'local_schema_name' FROM REDSHIFT DATABASE 'shared_database_name' SCEHMA 'shared_schema_name'

In our case that would be:

CREATE EXTERNAL SCHEMA 'reporting_datamart' FROM REDSHIFT DATABASE 'sales' SCHEMA 'reporting_datamart'

Note: For this option you won't need to create any additional objects (tables, views) of this schema since they will be automatically created.

Use Cases and How to Choose the Right Setup

While taking into consideration your reporting tool, other advanced analytics tools, and the workload you anticipate, you need to select one of the 3 options of querying your shared data on the Consumer cluster.

Option 1, where you query the data using a three-part notation is the best if you are starting the work from scratch and every query, report, and dashboard is created as new. If you bring anything that has been developed in your Production cluster, you will have to adjust every query so it would use thethree-part notation. Keep in mind that you can’t connect to this database - you would also need to adjust your connections to use a local database in that cluster.

Option 2 is perhaps the best option since it does not bring any limitations to your work. If you are moving existing work from your Production cluster to the Consumer cluster, make sure you name all your schemas the same as they are named in Production, and also name all your views the same name as the tables or views that you have in Production cluster. This way you will not have to edit any of your existing queries and everything you already have developed will continue to work in the Consumer cluster.

The downside of this approach is that you must create all this views in your local schemas to use them. In cases of a large number of database objects, this could be time-consuming.

Since this will be an external schema, you will not be able to create any other object in this schema. This could be a problem since some tools, like MicroStrategy, create temporary tables as part of their execution. This can lead to complicated adjustments on the side of the tool in form of forcing the tool to use some other local schema for all temporary tables. Also, even if your tools do not need temporary tables, it could be limiting for some of the users since they will not be able to create or use any other objects in these schemas.

AWS Redshift data sharing is an excellent way of segregating different workloads as well as securely sharing your Production data with different departments within the same company. You need to know exactly what your uses cases will be in order to set it up correctly. Otherwise, you could find yourself a couple of months into a new project just to realize that your initial configuration will not work for some new use-cases, and you will have to start over from the beginning. So, make sure you do your due diligence to understand what your shared cluster will be used for so you can choose the best setup for your requirements.

If you are interested in AWS topics, check out our other related blog posts!